Apple unveiled the iPhone 8 and iPhone X today with a new feature that, on the surface, looks amazing. Building on last year’s “Portrait Mode,” which faked a shallower depth of field than a small lens is physically capable of, this new addition is called “Portrait Lighting.” In theory, it re-lights a photo as if it were taken in a professional studio.

By analyzing the information it gets from its two lenses, the phone is able to create a map of distance data. Combining this information with facial recognition software (which comes with a whole other can of worms), it will re-light the scene for you. Apple suggests this is akin to professional portrait lighting. Presumably this means flattering light, and here is where things get dicey.

Flattering to whom?

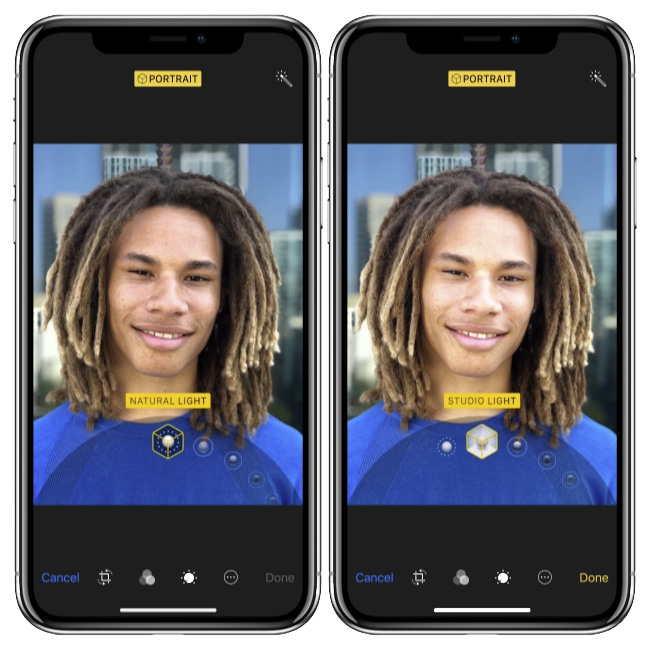

The trouble is these algorithms aren’t neutral. Cameras already aren’t neutral. (Even film wasn’t neutral.) If you have dark skin, you know this. Once the phone is analyzing facial geometry, what will it do next? Make cheekbones look higher? Noses thinner? Maybe. Will it distinguish between a Northern European face and an Asian face and “move” the lighting accordingly? Maybe. Will it lighten skin on the assumption that lighter is better? According to Apple’s publicity, yes. This is directly from Apple’s site showing the difference between natural light and the “improved” studio light effect:

It is also possible the phone will adjust based on what we tell the phone we like. What happens when we humans, filled with our insecurities born out of a culture that devalues people of color, get to adjust how we look in-camera until we are happy? Or until we think it will make other people happy?

There are limits to how much the iPhone will be able to change. Despite all the typical crowing about “the most advanced processor yet,” it won’t be able to redraw you, or the light. This, unfortunately, is more bad news. Last year’s Portrait Mode, which faked the depth of field people love in portrait photography, did a crude job of it. Optics are complicated. Blurring is easy. Look at the edges of these photos and you’ll find odd little halos that remind me of the eclipsed sun’s corona. A lot of folks don’t notice. A lot don’t care. The same will be true of this adjustment: a computer’s ham-handed attempt to lighten and darken parts of the image, which in the end looks very different from actual lighting.

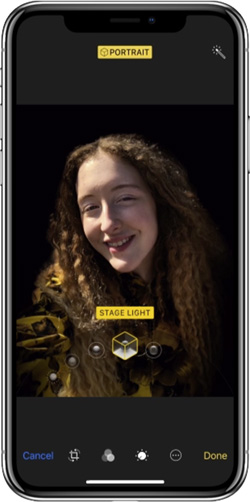

Even the best-case scenario presented by Apple today looked a little amateurish. Folks with dark hair should prepare for lots of floating-face images in Apple’s “Stage Lighting” mode.

But there is something even worse lurking under the surface than lowered aesthetic standards and the effects of unconscious bias on beauty standards. The loss of objectivity.

Photos are never truly objective, but they’ve been close. They are framed and timed and, if you bring them into Photoshop, all bets are off. But still, they have some truth to them, especially at the moment they are taken. Light is generally recorded at a specific moment. It may be filtered in a way that favors white folks, but it’s mostly accurate in what it records. What happens when a company like Apple decides to alter the reality of the picture before it is even captured? This is about more than adding some Snapchat bunny ears. What happens if a photo is taken with an iPhone 8 by a reporter at a historic moment? Can we trust it?

I am aware that eventually none of this will matter. In time, cameras will become a technology of the past, supplanted by devices that we will still probably call cameras. They will, however, be something else. They will record 3D space, color data, texture, and light, allowing you to render out your images, adjusting and readjusting until your 50th birthday party looks like your 30th, painted by Rembrandt and bearing no resemblance to the event itself.

When we can do this, what will happen to our memories? Will they be supplanted too? Or will they just fade, obscured by beautiful photographs that, in the end, recorded nothing?